Comparative Analysis of Advanced AI Models (NLP) for Customer Reviews

- Category: AI - NLP

- Client: Portfolio Project

- Project date: 23-04-2024

Portfolio detail

Objectives:

- Analyze Review Sentiment

- Compare Model Performance

- Gain Sentiment Insights

Libraries Used:

This Python program uses various libraries for tasks such as sentiment analysis, data manipulation, and visualization:

- Pandas

- NumPy

- matplotlib.pyplot

- Seaborn

- NLTK (Natural Language Toolkit)

- tqdm

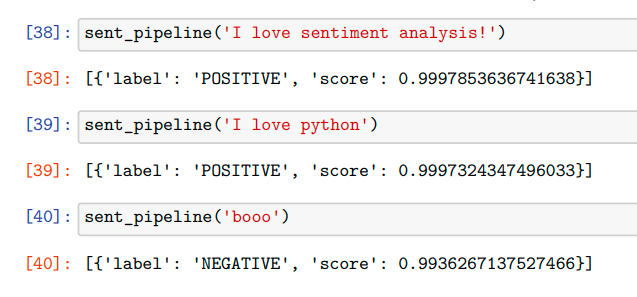

- transformers

- torch

Summary:

This project walks through sentiment analysis in Python. It covers a traditional lexicon-based approach, NLTK's VADER model, and a more advanced approach using a pre-trained RoBERTa model from Hugging Face. The dataset is Amazon Fine Food Reviews, which pairs each text review with a star rating from one to five.

- Data Loading and Exploration: The dataset containing reviews and star ratings is loaded using Pandas, and basic exploratory data analysis (EDA) is performed.

- Text Processing and Sentiment Analysis with NLTK: NLTK is used to tokenize sentences, tag parts of speech, chunk named entities, and score sentiment with the VADER model.

- Sentiment Analysis with RoBERTa: A pre-trained RoBERTa model from Hugging Face scores the same reviews. As a transformer, it takes the context around each word into account rather than scoring words in isolation.

- Comparison and Visualization: The results from both models are compared and visualized using Seaborn's pair plot and bar plots to assess their performance and identify any discrepancies or patterns.

- Example Analysis: Specific examples are examined where the sentiment analysis models exhibit unexpected behavior, highlighting the strengths and limitations of each approach.
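The scoring and comparison steps above could be sketched roughly as follows. This is a minimal illustration, not the project's actual code. It assumes the `cardiffnlp/twitter-roberta-base-sentiment` checkpoint (the write-up does not name the RoBERTa model), a negative/neutral/positive label order for that checkpoint, and the dataset's `Id` and `Score` columns. The helper names `softmax`, `load_scorers`, and `flag_disagreements` and the 0.5 threshold are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw classifier logits into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def load_scorers(checkpoint="cardiffnlp/twitter-roberta-base-sentiment"):
    """Return (vader, roberta) scoring functions.

    Imports are deferred to call time because this step downloads the
    VADER lexicon and the model weights. The checkpoint name is an
    assumption; the write-up does not say which RoBERTa model was used.
    """
    import nltk
    import torch
    from nltk.sentiment import SentimentIntensityAnalyzer
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    nltk.download("vader_lexicon", quiet=True)
    analyzer = SentimentIntensityAnalyzer()
    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSequenceClassification.from_pretrained(checkpoint)

    def vader(text):
        # polarity_scores returns the keys 'neg', 'neu', 'pos', 'compound'.
        scores = analyzer.polarity_scores(text)
        return {f"vader_{key}": value for key, value in scores.items()}

    def roberta(text):
        # Long reviews are truncated to the model's 512-token limit.
        encoded = tokenizer(text, return_tensors="pt", truncation=True, max_length=512)
        with torch.no_grad():
            logits = model(**encoded).logits[0].tolist()
        # Assumed label order for this checkpoint: negative, neutral, positive.
        neg, neu, pos = softmax(logits)
        return {"roberta_neg": neg, "roberta_neu": neu, "roberta_pos": pos}

    return vader, roberta


def flag_disagreements(rows, threshold=0.5):
    """Pick out rows where a model's score contradicts the star rating."""
    flagged = []
    for row in rows:
        for model in ("vader", "roberta"):
            positive_on_one_star = row["Score"] == 1 and row[f"{model}_pos"] > threshold
            negative_on_five_star = row["Score"] == 5 and row[f"{model}_neg"] > threshold
            if positive_on_one_star or negative_on_five_star:
                flagged.append((row["Id"], model))
    return flagged
```

Calling `load_scorers()` once and merging the outputs of `vader(text)` and `roberta(text)` into one dict per review, alongside its `Id` and `Score`, gives rows that `flag_disagreements` can scan. A single threshold for both models is a simplification: VADER's `pos` and `neg` values are proportions of the text and tend to be smaller than RoBERTa's probabilities, so a lower cutoff for VADER may be more appropriate.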